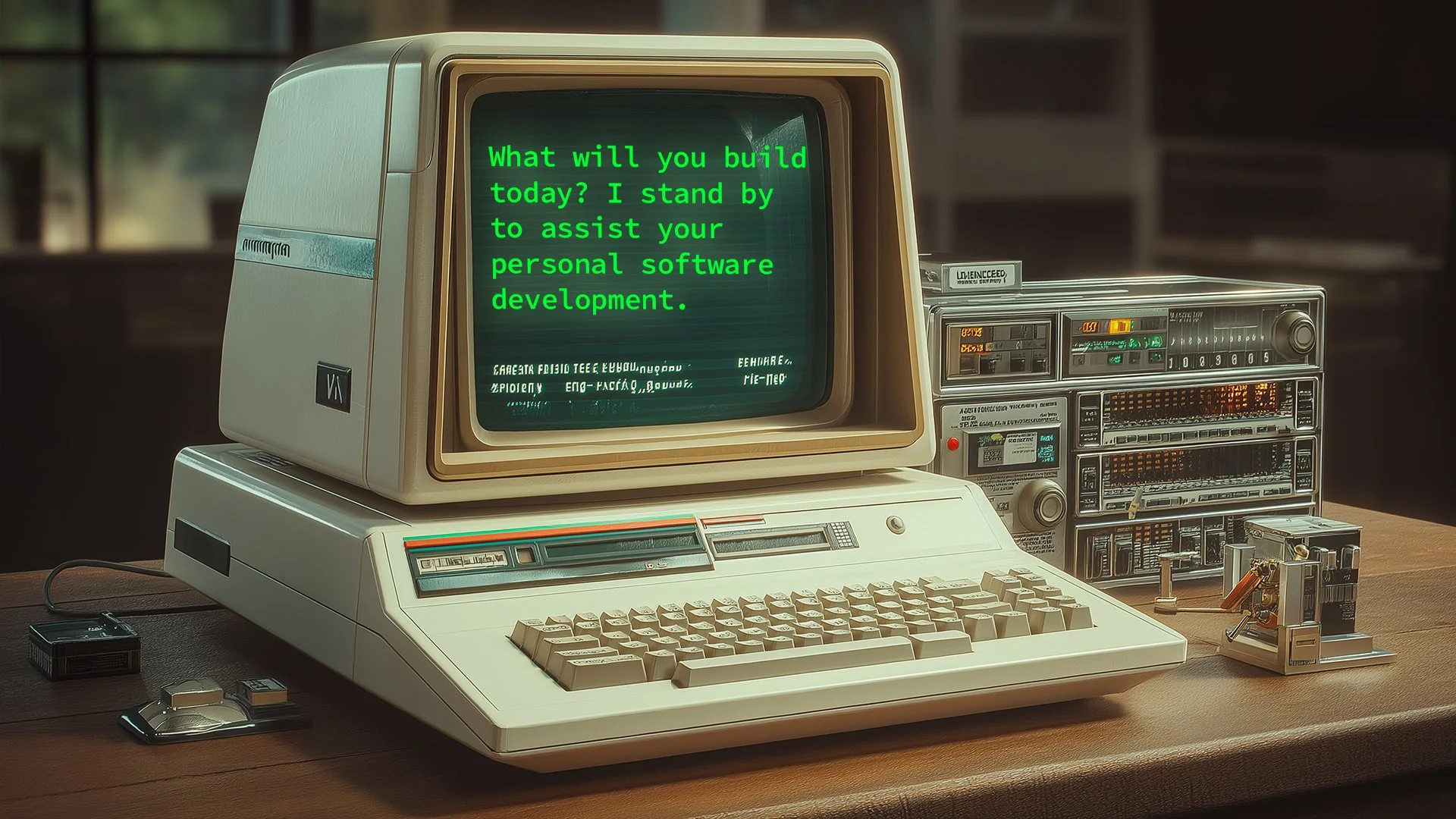

The Echo of Early Personal Computing

There was a brief, electric moment in the history of computing—roughly the late 1970s through the mid-1980s—when ordinary people could sit down at a keyboard and make a machine do something it hadn't done before. The Commodore 64, the BBC Micro, the Apple II: these were limited, clunky, and profoundly empowering. For a generation, they opened the door to a kind of creative agency that felt almost magical.

That door closed, gradually, as software became professionalized. The gap between what you could imagine and what you could build widened into a canyon. If you wanted a tool that didn't exist, you needed a developer—or you went without.

Vibe coding is reopening that door.

The term refers to the practice of describing what you want in natural language and letting a generative AI—tools like Claude, ChatGPT, or Copilot—write the code for you. No syntax to memorize. No debugging by hand. You describe your intent, and working software comes back in seconds.

In this episode of Modem Futura, we explore what this shift means, not just technically but humanly. One of the hosts demonstrates tools he built from single prompts (so-called ‘one-shots’): a horizon-scanning app for futures research and a two-by-two uncertainty matrix used in strategic foresight. Both worked on the first attempt. Both took less time to create than it takes to describe them.

The Inherited Power Problem

But the episode resists the temptation to treat this as a simple good-news story. The hosts dig into the real tensions: AI-generated code that no one fully understands, security vulnerabilities baked into apps that reach market before anyone reviews them, the new threat landscape of prompt injection, and the philosophical question of wielding power you haven't earned the literacy to evaluate—what the hosts call "inherited power."

There are also rich implications for education. Rather than relying on off-the-shelf apps that never quite fit, instructors and students alike can now build purpose-specific tools—and in doing so, develop a more grounded understanding of what these AI systems can and cannot do.

The deeper question the episode surfaces is less about code and more about agency. For decades, software was something done to us—platforms we adapted to, interfaces we learned, ecosystems we bought into. Vibe coding hints at a possible reversal: software shaped by the individual, for the individual, in the moment they need it.

Whether that future is liberating or reckless—or both—depends on the kind of literacy, caution, and imagination we bring to it.

Listen to the full conversation on Modem Futura.

Subscribe and Connect!

Subscribe to Modem Futura wherever you get your podcasts and connect with us on LinkedIn. Drop a comment, pose a question, or challenge an idea, because the future isn’t something we watch happen; it’s something we build together. The medium may still be the massage, but we all have a hand in shaping how it touches tomorrow.

🎧 Apple Podcast: https://apple.co/4rbOr9r

🎧 Spotify: https://open.spotify.com/episode/28DMXJsM2kEBA2QDxuDmtJ?si=AJpR7zCpRgS2KCCfWwjjWg

📺 YouTube: https://youtu.be/lQGYaiThuBk?si=nRbHVEQk9dwL3gXr

🌐 Website: https://www.modemfutura.com/