How 1,300 experts see the world's greatest threats—and what their blind spots tell us

Each year, the World Economic Forum surveys over a thousand experts worldwide—business leaders, academics, policymakers, and institutional leaders—to map perceived global risks. The resulting Global Risks Report isn't a prediction of what will happen. It's something potentially more valuable: a snapshot of collective concern, a reading of the signals building across economic, environmental, technological, and societal domains.

The 2026 edition reveals tensions worth examining closely.

Short-Term Fears: The Present Pressing In

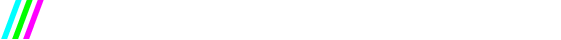

The two-year risk horizon is dominated by immediate geopolitical and informational concerns. Geoeconomic confrontation leads the list, having jumped eight positions from the previous year—a signal that trade conflicts, sanctions regimes, and economic nationalism have moved from background noise to foreground crisis for many observers.

Misinformation and disinformation hold second position, reflecting growing unease about information integrity at a time when AI-generated content is increasingly hard to distinguish from authentic material and social tolerance for deception seems to be expanding. Societal polarization follows in third place, and these three risks appear deeply interconnected: misinformation accelerates polarization, polarization enables economic nationalism, and economic nationalism creates more opportunities for information warfare.

Extreme weather events, state-based armed conflict, and cyber insecurity round out the top concerns for the immediate future.

Long-Term Concerns: The Environment Reasserts Itself

Expand the time horizon to ten years, and the risk landscape transforms. Environmental concerns claim five of the top ten positions, with extreme weather events, biodiversity loss and ecosystem collapse, and critical changes to Earth's systems occupying the top three spots.

This shift reveals something important about human risk perception: we consistently discount slow-moving catastrophes. Biodiversity loss lacks the urgency of trade wars, even though its cascading effects may ultimately prove more consequential. We've evolved to respond to immediate threats; we struggle to mobilize against dangers that unfold across decades.

Notably, societal polarization, ranked third in the short term, drops to ninth in the long-term view. Whether this reflects optimism that current divisions will heal, or simply that other risks loom larger over a ten-year horizon and push polarization down a relative ranking, remains an open question.

Different Lenses, Different Risks

Perhaps the report's most valuable contribution is its disaggregation of risk perception across demographics and geographies.

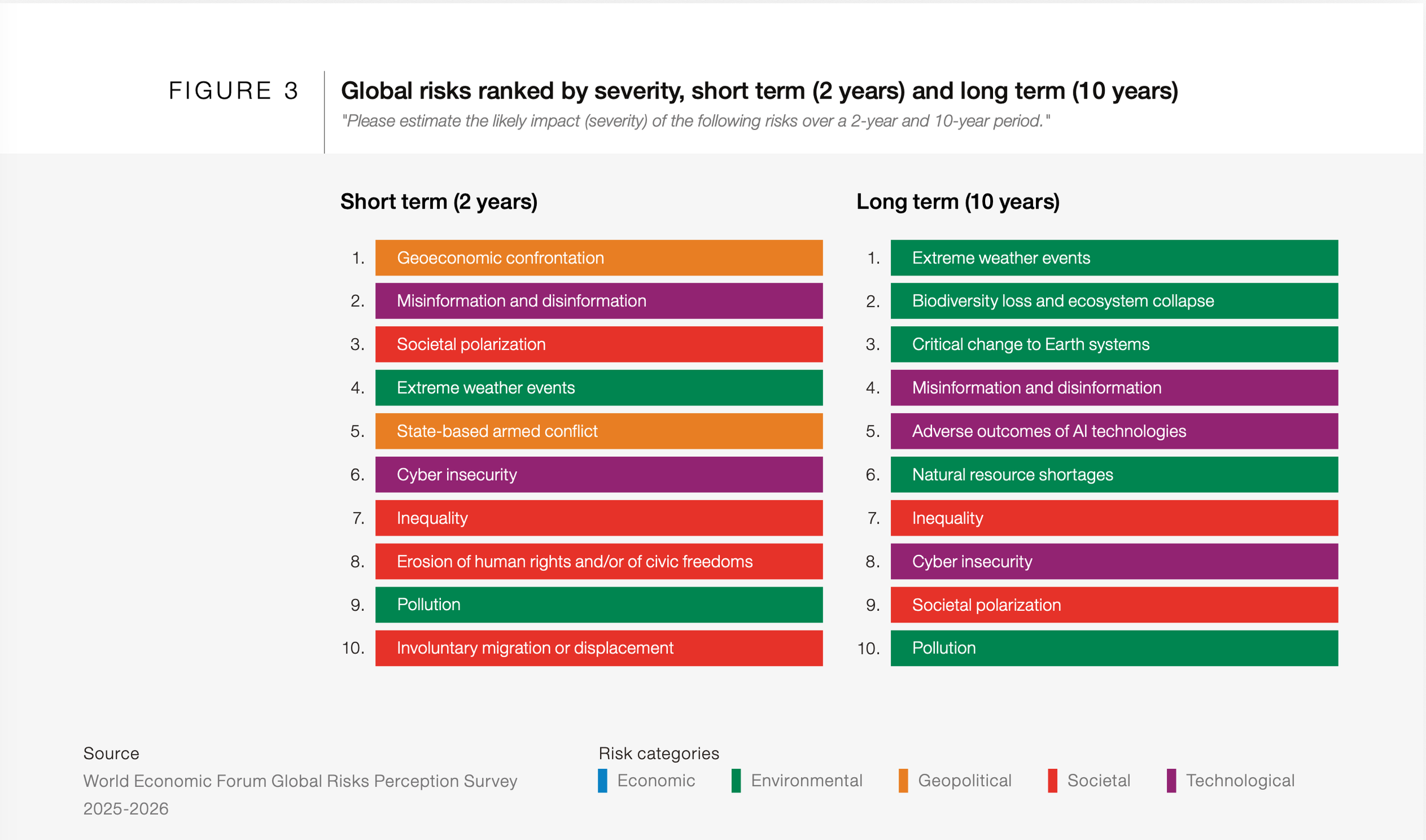

Age shapes perception. Respondents under 30 prioritize misinformation, extreme weather, and inequality. Those over 40 consistently rank geoeconomic confrontation as their primary concern. Generational experience matters: those who remember previous periods of great power competition read current signals differently than those encountering these dynamics for the first time.

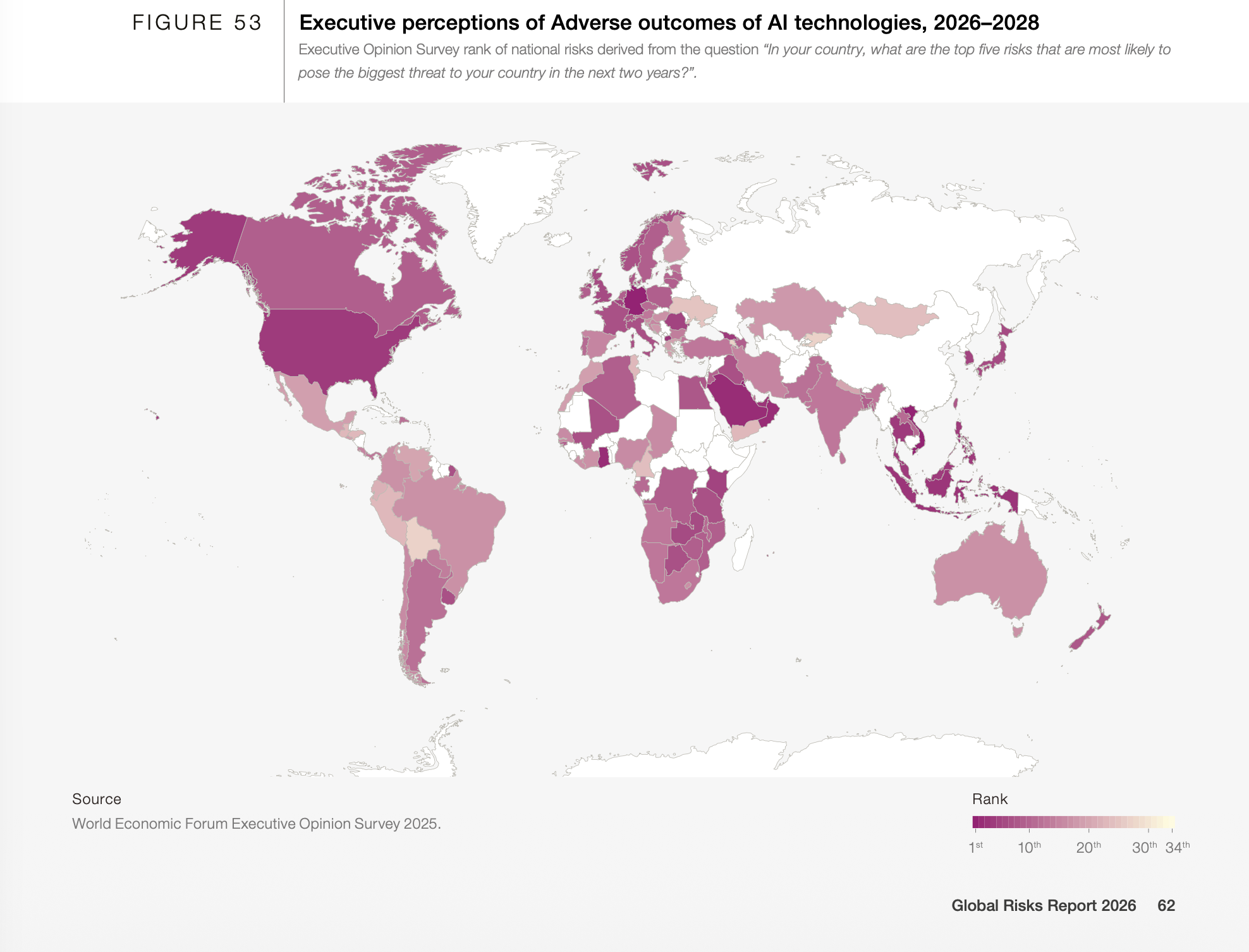

Geography shapes perception even more dramatically. AI risks, which dominate concerns in the United States, rank only 30th globally. In Brazil, Chile, and much of the world, more immediate concerns such as inequality, pollution, and resource access take precedence. This isn't a failure of foresight; it's a reminder that risk is contextual. What threatens your community depends on where your community sits.

Using Signals, Not Consuming Forecasts

Reports like this serve best as prompts for reflection rather than prescriptions for action. The value lies not in accepting these rankings as authoritative, but in using them to surface questions:

What assumptions am I making about stability that geoeconomic confrontation might disrupt?

How might misinformation affect my organization, my industry, my community's cohesion?

Which long-term environmental risks am I discounting because they feel distant?

Whose risk perceptions am I ignoring because they don't match my own context?

Human beings are, as far as we know, the only species capable of anticipating futures and adjusting present behavior accordingly. That capacity for foresight is a genuine superpower—but only if we use it. Signals become valuable when they prompt better questions. The work isn't to predict what happens next; it's to prepare ourselves for navigating uncertainty with more wisdom than our instincts alone would allow.

Modem Futura explores the intersection of technology, society, and human futures.

Download the full WEF Global Risks Report 2026: [PDF Web Link]

Subscribe and Connect!

Subscribe to Modem Futura wherever you get your podcasts and connect with us on LinkedIn. Drop a comment, pose a question, or challenge an idea, because the future isn’t something we watch happen; it’s something we build together. The medium may still be the massage, but we all have a hand in shaping how it touches tomorrow.

🎧 Apple Podcast: https://apple.co/4sUwhdG

🎧 Spotify: https://open.spotify.com/episode/0UoLHYJa8KHzbNbP564Qwy?si=h9WD1rE4Q6WTu6wOWlEQhA

📺 YouTube: https://youtu.be/-5PQMaqweNU

🌐 Website: https://www.modemfutura.com/